Geometry compression has always been an important topic. It is no coincidence that one of the most important Unreal Engine 5 features was Nanite, which brought a massive quality increase over previous versions of UE and over competitors. The biggest issue is that geometry is used in very different ways and is therefore limited by very different parts of the GPU pipeline. Nanite addressed static use cases: it made it possible to import film-quality assets with millions of polygons into UE and have them work flawlessly. But there are still many features that Nanite cannot cover, and most of them involve animation.

The most important requests from our first ZibraVDB customers are to add destruction, particles, and water simulation on top of volumetric effects, which would let them complete their entire VFX production inside UE. Indeed, it is hard to imagine an explosion simulated in Houdini without hard particles, debris, and shards of the destroyed environment. UE already has a solution for this called Geometry Cache, but it shares the flaw of every other straightforward solution: streaming.

Our Solution

With ZibraVDB, we solved this by drastically reducing asset size and providing extremely fast decompression. Now it's time to talk about something similar in another domain: animated mesh compression.

Generally speaking, the idea is the same, but the implementation is different and even more challenging.

Lossy compression is based on removing spatial redundancy. In the case of meshes, that means working with what is essentially two-dimensional data (surfaces), unlike OpenVDB volumes, which are three-dimensional.

First, this significantly lowers the achievable compression ratio. One could argue that geometry codecs such as Google Draco already offer good compression ratios of up to 30x. That is true, but our goal is not compression by itself; it is real-time rendering. To solve that problem, the codec has to be GPU-friendly first.
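To illustrate what "GPU-friendly" can mean in practice: entropy-coded streams, such as the ones Draco produces, are generally decoded sequentially, whereas a GPU wants every thread to decode its own vertex independently. Below is a minimal sketch of one common building block with that property, fixed-width quantization of positions relative to a bounding box. The function names and the 16-bit default are hypothetical, and this is not ZibraGDS's actual codec.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def quantize_positions(positions: Sequence[Vec3], bits: int = 16):
    """Quantize positions to fixed-width integers relative to their bounding box.

    Returns the box minimum, the per-axis step size, and one integer triple per vertex.
    """
    levels = (1 << bits) - 1
    lo = tuple(min(p[a] for p in positions) for a in range(3))
    hi = tuple(max(p[a] for p in positions) for a in range(3))
    # A flat axis (zero extent) gets a dummy step so we never divide by zero.
    step = tuple((hi[a] - lo[a]) / levels if hi[a] > lo[a] else 1.0 for a in range(3))
    codes: List[Tuple[int, int, int]] = [
        tuple(round((p[a] - lo[a]) / step[a]) for a in range(3)) for p in positions
    ]
    return lo, step, codes


def decode_vertex(lo: Vec3, step: Vec3, codes, index: int) -> Vec3:
    """Decode one vertex on its own: no neighbors or prior state are needed."""
    q = codes[index]
    return tuple(lo[a] + q[a] * step[a] for a in range(3))


pts = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.5), (0.25, 1.5, 0.125)]
lo, step, codes = quantize_positions(pts)
print(decode_vertex(lo, step, codes, 2))  # close to (0.25, 1.5, 0.125)
```

Because every vertex uses the same number of bits, vertex `i` can be located and decoded without touching its neighbors, at the cost of a worst-case error of half a quantization step per axis.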

Another such codec is AMD DGF (Dense Geometry Format). It is GPU-friendly and very fast, but it is designed to compress topology only: vertices and indices. According to AMD's latest updates, it is aimed at accelerating ray tracing, which makes a lot of sense for that application, but it does not compress the remaining vertex attributes. When we speak about a “mesh”, we have to keep in mind that a vertex is not defined by its index and position alone. For actual rendering, it requires a lot of additional data, such as normals, UVs, bitangents, vertex colors, velocity vectors, etc. Handling all of it requires a very different approach from the one AMD designed.
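A quick back-of-the-envelope calculation shows why compressing positions alone is not enough. The layout below is hypothetical (real vertex formats vary by engine and asset), but with full-precision attributes the position accounts for only a fifth of each vertex.

```python
# Hypothetical uncompressed vertex layout: attribute name -> size in bytes.
VERTEX_LAYOUT = {
    "position": 3 * 4,   # float3
    "normal": 3 * 4,     # float3
    "bitangent": 3 * 4,  # float3
    "uv": 2 * 4,         # float2
    "color": 4 * 1,      # RGBA8
    "velocity": 3 * 4,   # float3 motion vector
}


def covered_fraction(layout: dict, covered: set) -> float:
    """Fraction of per-vertex bytes touched by a codec that handles only `covered` attributes."""
    total = sum(layout.values())
    return sum(size for name, size in layout.items() if name in covered) / total


print(sum(VERTEX_LAYOUT.values()))                    # → 60 bytes per vertex
print(covered_fraction(VERTEX_LAYOUT, {"position"}))  # → 0.2
```

In this layout, a position-only codec leaves 80% of the per-vertex data untouched, which is why attribute compression needs its own design.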

We developed a new approach that relies heavily on one of the most recent GPU features: mesh shaders. We subdivide the mesh into meshlets at the compression stage and perform decompression in both the amplification and mesh shader stages, which allows fully parallel rendering of animated sequences with millions of vertices per frame.
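As a rough illustration of the meshlet subdivision step, here is a minimal greedy builder: it walks the triangles in order and starts a new meshlet whenever the next triangle would exceed a vertex or triangle limit. The limits (64 vertices, 124 triangles) are illustrative values typical for mesh shader workloads, not ZibraGDS's parameters, and production builders such as meshoptimizer's `meshopt_buildMeshlets` also take spatial locality and culling into account.

```python
from typing import Dict, List, Tuple

Triangle = Tuple[int, int, int]


def build_meshlets(triangles: List[Triangle], max_vertices: int = 64,
                   max_triangles: int = 124) -> List[dict]:
    """Greedily pack triangles, in input order, into size-limited meshlets.

    Each meshlet keeps a list of unique global vertex indices plus triangles
    expressed as local indices into that list.
    """
    if max_vertices < 3 or max_triangles < 1:
        raise ValueError("a meshlet must fit at least one triangle")

    meshlets: List[dict] = []
    verts: List[int] = []         # global vertex indices of the current meshlet
    local: Dict[int, int] = {}    # global index -> local index
    tris: List[Triangle] = []     # triangles in local indices

    for tri in triangles:
        unique = list(dict.fromkeys(tri))  # dedupe, keep order
        new = [v for v in unique if v not in local]
        if len(verts) + len(new) > max_vertices or len(tris) == max_triangles:
            # Current meshlet is full: close it and start a fresh one.
            meshlets.append({"vertices": verts, "triangles": tris})
            verts, local, tris = [], {}, []
            new = unique
        for v in new:
            local[v] = len(verts)
            verts.append(v)
        tris.append(tuple(local[v] for v in tri))

    if tris:
        meshlets.append({"vertices": verts, "triangles": tris})
    return meshlets


# A 20x20 quad grid (800 triangles) on a 21x21 vertex lattice.
n = 20
grid = [t for y in range(n) for x in range(n)
        for i in [y * (n + 1) + x]
        for t in ((i, i + 1, i + n + 2), (i, i + n + 2, i + n + 1))]
meshlets = build_meshlets(grid)
print(len(meshlets), [len(m["vertices"]) for m in meshlets][:3])
```

With at most 64 vertices per meshlet, local indices fit in a single byte, and each meshlet can be handed to one mesh shader workgroup independently of the others.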

We should add that we had to make some customizations to Unreal Engine to make this work. First, we extended the RHI to allow writing to UAV buffers in the amplification and mesh shader stages, which lets us store data between these stages. The second set of modifications concerned shadow rendering: shadow map atlases, shadow map cubes, and the virtual shadow map renderer. With these changes, we implemented custom shadow map rendering for our geometry.

Unlike volumetrics, meshes have to be rendered in multiple passes, which puts even stricter performance requirements on us. In Unreal Engine, geometry is usually rendered several times:

- Visibility pass with positions for the late Z buffer.

- Shadow pass, which may itself run several times (for example, once per shadow-casting light).

- Base Pass with all attributes.

This is one of the main reasons Geometry Cache struggles: it needs to stream data from the CPU to the GPU multiple times. In NVIDIA Nsight, it looks like this:

[Figure: NVIDIA Nsight capture of a frame rendered with Geometry Cache]

Blue bars show PCI Express activity, since the data has to be copied multiple times. Note the huge 2.2 ms CopyBufferRegion chunk at the beginning of the frame, which ZibraGDS simply doesn't have.
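A rough estimate shows why re-uploading vertex data per pass adds up. The sketch below assumes 500k vertices at 60 bytes each, three passes (depth, one shadow pass, base pass), and a theoretical ~32 GB/s for a PCIe 4.0 x16 link; real transfers are slower, and this is a simplified model, not how Geometry Cache actually sizes its uploads.

```python
def upload_time_ms(vertices: int, bytes_per_vertex: int, passes: int,
                   link_gb_per_s: float) -> float:
    """Milliseconds spent copying one frame's vertex data once per render pass."""
    total_bytes = vertices * bytes_per_vertex * passes
    return total_bytes / (link_gb_per_s * 1e9) * 1e3


# Assumed numbers: 500k vertices, 60 bytes each, 3 passes, ~32 GB/s link.
print(upload_time_ms(500_000, 60, 3, 32.0))
```

Even at the theoretical peak, this model lands in the millisecond range per frame, a significant slice of a 16.7 ms budget at 60 fps, and it grows linearly with every extra shadow pass.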

Our rendering pipeline differs in several crucial ways:

- Visibility prepass for decompressed geometry, which fills the indirect buffer with visible meshlets.

- GPU-resident buffer for compressed geometry.

- ExecuteIndirect invocation for all passes.

Together, these changes remove the need to stream data from the CPU to the GPU, as well as the readbacks required to issue draw calls from the CPU. The result looks like this:

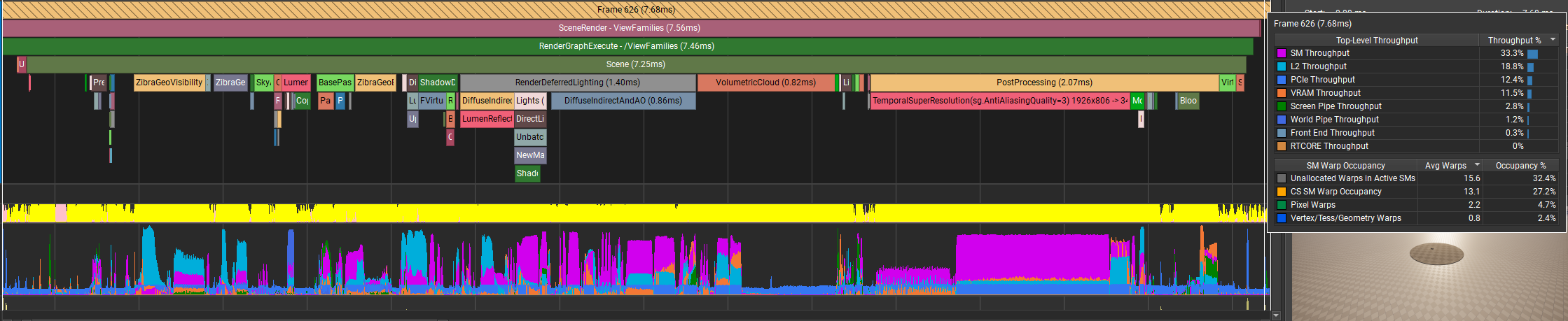

Purple and cyan bars show SM (compute) and L2 (cache) throughput, the typical limiting factors for any well-optimized GPU pipeline.
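The visibility prepass from the list above can be pictured as a compaction step. The sketch below emulates on the CPU what a compute shader might do: test each meshlet's bounding sphere against the frustum planes, collect the visible meshlet IDs, and write arguments for an indirect dispatch. The plane convention, data layout, and one-group-per-meshlet mapping are assumptions for illustration; the argument tuple mirrors the three thread-group counts of D3D12's `D3D12_DISPATCH_MESH_ARGUMENTS`.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Frustum plane (nx, ny, nz, d) with a unit-length normal pointing inward:
# a point p is inside when nx*px + ny*py + nz*pz + d >= 0.
Plane = Tuple[float, float, float, float]


@dataclass
class MeshletBounds:
    center: Tuple[float, float, float]
    radius: float


def sphere_in_frustum(b: MeshletBounds, planes: List[Plane]) -> bool:
    """Conservative test: reject only if the sphere is fully outside some plane."""
    cx, cy, cz = b.center
    for nx, ny, nz, d in planes:
        if nx * cx + ny * cy + nz * cz + d < -b.radius:
            return False
    return True


def visibility_prepass(bounds: List[MeshletBounds], planes: List[Plane]):
    """CPU stand-in for a compute pass that compacts visible meshlet IDs.

    On a GPU, each thread would test one meshlet and append its ID through an
    atomic counter; the list comprehension plays that role here.
    """
    visible_ids = [i for i, b in enumerate(bounds) if sphere_in_frustum(b, planes)]
    # (ThreadGroupCountX, Y, Z), assuming one mesh shader group per visible meshlet.
    dispatch_args = (len(visible_ids), 1, 1)
    return visible_ids, dispatch_args


# Slab 0 <= x <= 10 as a two-plane "frustum".
planes = [(1.0, 0.0, 0.0, 0.0), (-1.0, 0.0, 0.0, 10.0)]
bounds = [
    MeshletBounds((5.0, 0.0, 0.0), 1.0),    # fully inside
    MeshletBounds((-5.0, 0.0, 0.0), 1.0),   # fully outside
    MeshletBounds((-0.5, 0.0, 0.0), 1.0),   # straddles x = 0
    MeshletBounds((12.0, 0.0, 0.0), 1.0),   # fully outside
]
print(visibility_prepass(bounds, planes))  # → ([0, 2], (2, 1, 1))
```

Because the argument buffer is produced on the GPU, the CPU never needs to read back visibility results before issuing ExecuteIndirect, and every pass can reuse the same compacted list.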

This approach comes at a cost, of course: you need to store everything in VRAM. On the other hand, it not only lets you render heavy assets but makes it possible to load them into UE at all!

The graphs above were captured while testing an animated Alembic sequence with 500k vertices per frame and 300 frames, 11 GB in total.

When we tried a 92 GB sequence with 1M vertices per frame and 650 frames using Geometry Cache, UE simply crashed (on a system with 64 GB of RAM). The same sequence imported without problems using ZibraGDS, producing a 5.6 GB compressed asset and occupying a total of 11 GB of VRAM.
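For a sense of scale, the figures above can be turned into average bytes per vertex per frame. This assumes decimal gigabytes and that each total covers all data in the sequence; it is simple arithmetic on the numbers quoted, not an additional measurement.

```python
GB = 1e9  # assumption: decimal gigabytes


def bytes_per_vertex_frame(total_bytes: float, vertices_per_frame: int, frames: int) -> float:
    """Average storage per vertex per frame across a whole sequence."""
    return total_bytes / (vertices_per_frame * frames)


small_raw = bytes_per_vertex_frame(11 * GB, 500_000, 300)        # 11 GB Alembic test
large_raw = bytes_per_vertex_frame(92 * GB, 1_000_000, 650)      # 92 GB sequence
large_zibra = bytes_per_vertex_frame(5.6 * GB, 1_000_000, 650)   # 5.6 GB compressed asset

print(f"{small_raw:.1f} B, {large_raw:.1f} B -> {large_zibra:.1f} B")  # → 73.3 B, 141.5 B -> 8.6 B
print(f"compression ratio ~{large_raw / large_zibra:.1f}x")            # → compression ratio ~16.4x
```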

Results

You can see our first metrics, along with visual examples, in our teaser video. It is worth mentioning that Geometry Cache performance is highly inconsistent across different hardware. All metrics in the video were captured on an MSI Stealth 16 AI Studio A1V laptop.

Our technology is designed mostly for virtual production studios, which use powerful GPUs and can afford to spend several milliseconds on Alembic assets in a scene. But we believe many others will find it useful too, since it accelerates not only rendering but also import, and it is faster to work with than Geometry Cache.

Next Steps

Point clouds and curves

While mesh compression is the main use case, we plan to extend our technology to other types of geometry. Point clouds will be useful, since the Houdini Niagara plugin for particles has the same issues as Geometry Cache, and curves might be useful for caching hair.

Streaming of compressed data for large assets

Right now, ZibraGDS keeps all compressed data in VRAM. But for really long sequences, even compressed data might take a lot of space, so optional streaming of compressed data could be useful in such cases.
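Streaming is only planned, and its design is not described here. Purely as an illustration, one simple residency policy would split the compressed sequence into fixed-size chunks of frames and keep only the current chunk plus a small look-ahead in VRAM. The chunking scheme and all names below are hypothetical.

```python
from typing import List


def resident_chunks(current_frame: int, frames_per_chunk: int,
                    total_frames: int, budget_chunks: int) -> List[int]:
    """Chunk indices to keep in VRAM: the current chunk plus look-ahead.

    The window is clamped to the end of the sequence; looping playback and
    eviction order are left out of this sketch.
    """
    total_chunks = -(-total_frames // frames_per_chunk)  # ceiling division
    first = current_frame // frames_per_chunk
    last = min(first + budget_chunks, total_chunks)
    return list(range(first, last))


# 650 frames in 30-frame chunks, with room for 3 chunks in VRAM.
print(resident_chunks(95, 30, 650, 3))   # → [3, 4, 5]
print(resident_chunks(640, 30, 650, 3))  # → [21]
```

With a policy like this, VRAM use is bounded by the chunk budget rather than by sequence length, at the cost of having to upload each chunk ahead of playback.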

Support for translucent and masked materials

So far, we have only experimented with opaque materials. Supporting other surface types might be trickier, since culling won't be as efficient, but we still plan to support all of them.

Support for different input file types

Currently, ZibraGDS accepts Alembic files as input. This is not a technical limitation, and we do plan to add support for USD and other file types.

Performance

Performance is an evergreen task in 3D graphics, and we’ll work on it at all stages of feature development.

ZibraGDS is currently at an early stage, and we have plenty of plans. This is only our first step, and there will be much more to show. If you need animated meshes for destruction, liquids, crowds, etc., apply to the ZibraGDS waitlist, and we'll help you deliver even more amazing content faster than before!